Running out of GPUs

OpenAI and Microsoft Azure can't keep up with the demand for GPT-4: they don't have enough GPUs.

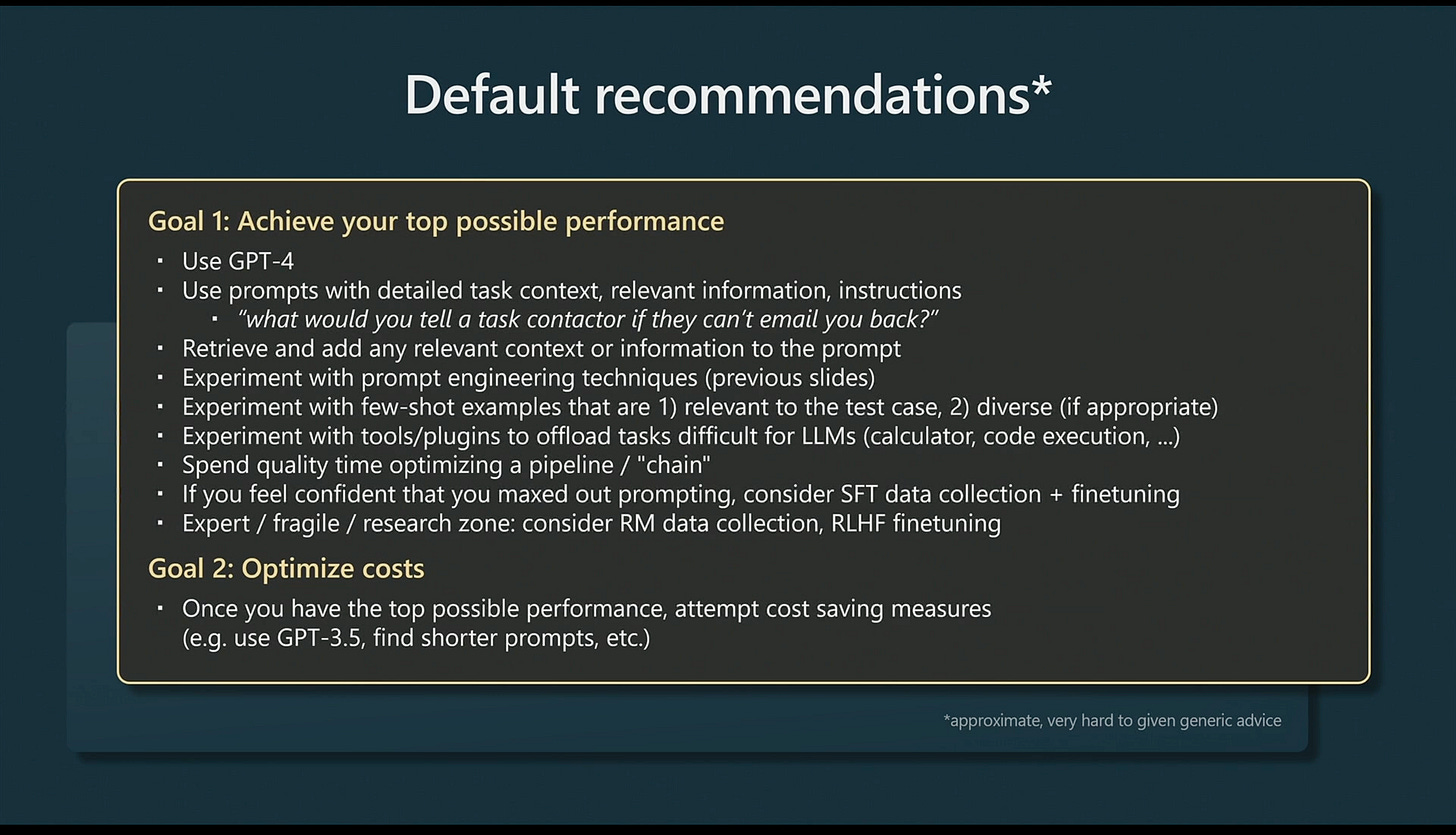

What is the best and fastest way to prototype products with an LLM under the hood? As Andrej Karpathy presented at Microsoft Build 2023, you should first achieve top performance by using the best possible model: GPT-4.

This is nice in theory and makes a lot of sense. In practice, that advice can be tricky to follow.

Here is an email that Microsoft Azure sent a few days ago to a small company applying for GPT-4 access:

Please note that we are currently paused on onboarding new GPT-4 customers due to the high demand and do not have an estimated time for when we will onboard new customers.

Microsoft Azure doesn’t have the compute capacity to keep up with the demand for GPT-4. GPUs are the culprit here: suddenly everybody needs dedicated ML hardware in large quantities.

Because it is locked into Azure for hosting, OpenAI itself is also affected. Sam Altman talked about this recently at a developer meeting: “OpenAI is heavily GPU limited at present”.

This GPU scarcity manifests in 3 ways:

Onboarding of new customers is limited. Company growth and development stall, since it is impossible to serve new customers.

New features are delayed (e.g. larger context windows and fine-tuning).

Existing GPT-4 customers report performance issues and rate limiting even with small workloads.

I’ve heard stories of companies automatically switching between GPT-4 regions (e.g. from the EU to the USA and back) just to avoid rush hours and performance problems.
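The region-switching workaround can be sketched as a simple failover loop. This is a minimal sketch under stated assumptions, not how any particular company does it: the region names (`eu`, `us`), the `RegionUnavailable` exception and the `call_region` callable are all hypothetical stand-ins for whatever client you actually use to reach GPT-4 in a given region. A real client would raise its own error type when it gets rate limited (e.g. HTTP 429).

```python
import time
from typing import Callable, Sequence, Tuple


class RegionUnavailable(Exception):
    """Hypothetical error a regional client raises when overloaded or rate limited."""


def complete_with_failover(
    prompt: str,
    regions: Sequence[str],
    call_region: Callable[[str, str], str],  # hypothetical: (region, prompt) -> completion
    retries_per_region: int = 1,
    backoff_seconds: float = 0.0,
) -> Tuple[str, str]:
    """Try each region in order and return (region, completion) from the first success."""
    errors = []
    for region in regions:
        for attempt in range(retries_per_region):
            try:
                return region, call_region(region, prompt)
            except RegionUnavailable as exc:
                errors.append(f"{region} attempt {attempt + 1}: {exc}")
                # Exponential backoff between retries in the same region,
                # but no sleep after the last attempt before moving on.
                if attempt + 1 < retries_per_region:
                    time.sleep(backoff_seconds * (2 ** attempt))
    raise RuntimeError("all regions failed: " + "; ".join(errors))


def fake_call(region: str, prompt: str) -> str:
    """Stand-in client: pretend the EU region is in its rush hour."""
    if region == "eu":
        raise RegionUnavailable("429 Too Many Requests")
    return f"[{region}] echo: {prompt}"


if __name__ == "__main__":
    print(complete_with_failover("Hello", ["eu", "us"], fake_call))
    # → ('us', '[us] echo: Hello')
```

The region order doubles as a preference list, so a real setup could reorder it by time of day to steer around known peak hours.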

I don’t think the situation is going to improve any time soon for customers of Microsoft Azure or OpenAI. The same limitation applies to the other large vendors as well: there is too much demand and not enough NVIDIA GPUs available.

Even if another company could secure enough GPUs to train a good foundation model, it would still be starved for hardware to serve that model to all of its customers. If you can’t handle the demand, you can’t grow.

In the short term, we could cross large model providers off the list of reliable and scalable sources of powerful language models. GPT-4 is great for small-scale prototyping, but that’s about it.

This doesn’t mean you shouldn’t sign up for all possible waitlists in advance, just in case. On the contrary: do it, and the earlier the better:

AWS Bedrock: Amazon’s equivalent of Azure OpenAI, which is supposed to host Claude.

Go ahead, do that right now! GPT-4 and Claude still give you an edge over the competition. Why? They are still the best tools for early-stage product development. You can prototype quickly, implement your AI-driven feature ideas and collect feedback.

In the next newsletter, I’m going to talk about later stages of product development, where you need stability, scale and predictable costs. We’ll take a deeper look at the other end of the spectrum, which is thriving in a world of scarce GPUs: small, local language models.