On new LLMs, their irrelevance and the potential of ChatGPT

Let’s catch up! We’ll talk about:

A few notable large language model releases

Why all of these models are already irrelevant

Product-specific LLM benchmarks

On the potential of ChatGPT

Answering GPT-related questions

New LLM releases

There were a lot of model releases in the past few days. Below are a few notable ones:

HuggingChat - a 30B model based on LLaMa and tuned on the OpenAssistant dataset. Tricky to download, but possible. The OpenAssistant dataset could help tune other models to behave like ChatGPT, so expect more models to use it.

Replit code completion model - this one is special, because they took a small model and heavily tuned it on a large code dataset. This pays off, because it makes the model faster and cheaper to run. More companies will follow this approach in the coming months.

MPT-1b-RedPajama-200b - this is a tiny model from Mosaic ML. This model is a waste of space and energy that was done as a publicity stunt. However, it is one of the few models that use the Red Pajama dataset - a new clean-room, fully open-source implementation of the LLaMa dataset. What does Red Pajama mean? Expect really good LLM models that you can legally use, probably in the coming months.

StabilityAI (the folks behind Stable Diffusion) started releasing versions of a new open-source model called StableLM. Smaller versions are already available to download and run locally. They are remixing good datasets into good models.

These are the models from the last 10 days that come off the top of my head. There were a lot more.

All of these models are irrelevant on a large scale!

Why? Because we are in a very interesting moment in history. A lot of companies are investing huge resources in training large language models. This pushes the edge forward and makes models become obsolete really fast. Either you are at the top or nobody will care about your model.

Do you even remember the name of that large language model from Google that was supposed to compete with ChatGPT (GPT-4)? It is still an experiment that few really care about.

Imagine you are a company that has been training some new language model for the last couple of months. What would you do if somebody else has just announced or released a much better model? You could at least try releasing it publicly to get some free publicity and PR from it.

This is what Meta has done with the LLaMa model. This is why we are getting so many public language model releases each day. This is also the reason why the specific models on the list above are irrelevant - better versions will come out in the next few weeks.

What does this mean for the product development strategy around ML/GPT?

More efficient and performant models will show up in the near future.

It will be easier to run a powerful model locally.

It will be easier than ever to fine-tune an LLM to your needs (e.g. see Lamini).

How can we plan for such a future?

Prototype against the best possible models now, even if they are an expensive service (OpenAI GPT3.5/GPT4 at the moment). You need to build momentum and in-house expertise.

Gather all possible data that comes from the interactions with these models. If your product survives, you could later use that data to fine-tune your own models.

Build a set of LLM benchmarks that are specific to ML features in your products.
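The "gather all possible data" step can start very small. Below is a minimal sketch, assuming that an append-only JSONL file is enough for a prototype. The function names, the `feature` field and the `accepted` flag are hypothetical choices for illustration, not part of any specific library.

```python
import json
import time
from pathlib import Path


def log_interaction(log_path, feature, model, prompt, response, accepted=None):
    """Append one prompt/response pair to a JSONL file.

    `feature` and `accepted` are hypothetical fields: which product feature
    issued the prompt, and whether the user kept the answer (if known).
    """
    record = {
        "ts": time.time(),
        "feature": feature,
        "model": model,
        "prompt": prompt,
        "response": response,
        "accepted": accepted,
    }
    # One JSON object per line keeps the log easy to append to and to stream.
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record


def load_interactions(log_path):
    """Read the log back as a list of dicts, e.g. to build a fine-tuning set."""
    with Path(log_path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Calling `log_interaction(...)` right next to every model call is usually enough to start with. Storing the model name with each record lets you later compare or filter answers by the model that produced them.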

Let’s focus on that last “product-specific LLM benchmarks” part for a second.

Product-specific LLM benchmarks

What is a product-specific LLM benchmark? It is a test that evaluates how well LLM prompts from your product work against a specific model.

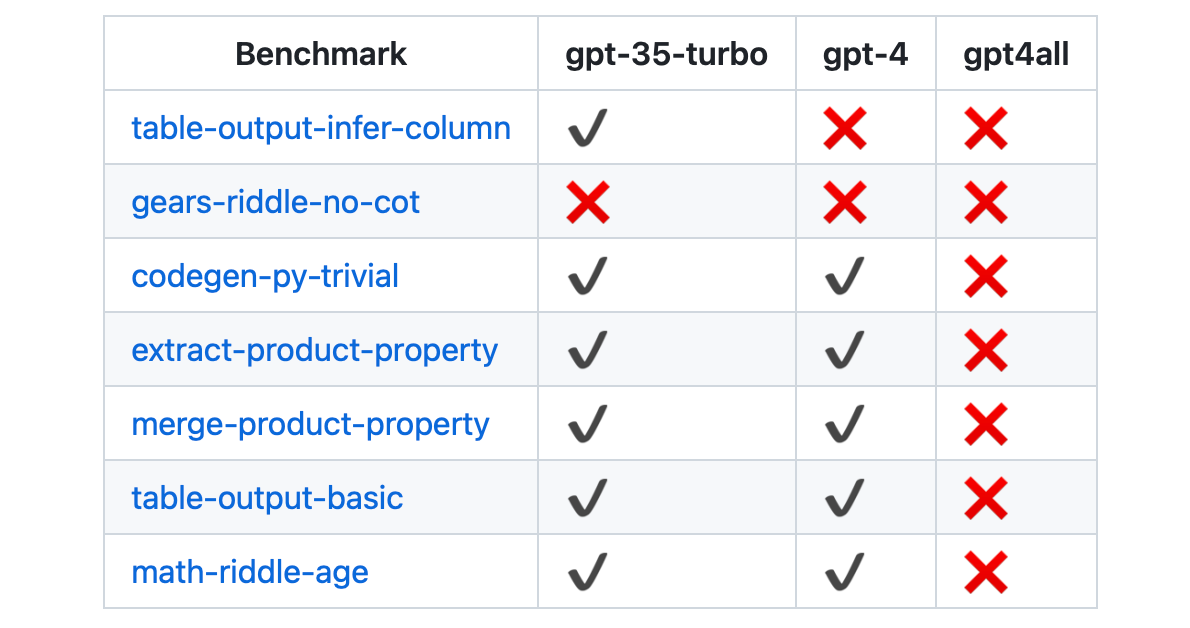

A picture is worth a thousand tokens, so here is an early example:

Rows represent various LLM capabilities expressed as separate prompts. ML-driven features usually depend on a couple of capabilities at once.

Columns show how well a specific LLM model can carry out the task in the prompt.

As you can see, GPT3.5 fails one of the benchmarks, GPT4 fails two (because it was too creative with the answer), and the GPT4ALL model running locally fails all of them.

Such benchmarks are like unit tests that protect your LLM-dependent features from regressions. They let you optimise different parts of the LLM pipeline by picking the best model for each part to run on (which can also mean the cheapest or the most secure one).
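To make the unit-test analogy concrete, here is a minimal sketch of such a harness. It is not the actual benchmark code, just an illustration under a few assumptions: a "model" is any function from prompt text to response text, each benchmark carries its own pass/fail check, and the two stub models stand in for real API or local calls. All names (`Benchmark`, `run_benchmarks`, `cheapest_passing`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Assumption: a "model" is any function from prompt text to response text.
Model = Callable[[str], str]


@dataclass
class Benchmark:
    name: str                      # capability under test (one row)
    prompt: str                    # prompt taken from the product
    check: Callable[[str], bool]   # True if the response is acceptable


def run_benchmarks(benchmarks: List[Benchmark],
                   models: Dict[str, Model]) -> Dict[str, Dict[str, bool]]:
    """Return results[benchmark_name][model_name] = passed."""
    results = {}
    for bench in benchmarks:
        row = {}
        for model_name, model in models.items():
            try:
                row[model_name] = bool(bench.check(model(bench.prompt)))
            except Exception:
                row[model_name] = False  # a crash counts as a failure
        results[bench.name] = row
    return results


def cheapest_passing(results, cost, required):
    """Pick the cheapest model that passes every benchmark in `required`."""
    models = {m for row in results.values() for m in row}
    ok = [m for m in models if all(results[b][m] for b in required)]
    return min(ok, key=lambda m: cost[m]) if ok else None


# Stub models standing in for real API or local calls (purely illustrative).
def strict_model(prompt: str) -> str:
    return "yes" if "yes or no" in prompt else "4"


def chatty_model(prompt: str) -> str:
    return "Great question! The answer is probably 4, I think."


benchmarks = [
    Benchmark("answer-only-number",
              "What is 2 + 2? Reply with the number only.",
              lambda r: r.strip() == "4"),
    Benchmark("yes-no",
              "Is Paris in France? Answer yes or no.",
              lambda r: r.strip().lower() in {"yes", "no"}),
]
models = {"strict": strict_model, "chatty": chatty_model}

results = run_benchmarks(benchmarks, models)
for name, row in results.items():
    print(name, row)
```

The chatty stub fails both checks for the same reason a model can fail by being "too creative": the content may be right, but the feature needs an answer in an exact shape. With per-model costs (made-up numbers here), `cheapest_passing(results, {"strict": 10.0, "chatty": 1.0}, ["yes-no"])` selects the cheapest model that is still good enough for one part of the pipeline.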

These benchmarks are being developed at Trustbit, so expect a more detailed blog post soon. I’ll link to it in due time.

Meanwhile, here is a bit of exciting news. Did you know that the StableLM-7B model already passes some of the product-specific LLM benchmarks? It isn’t as intelligent as GPT-4 (or even GPT3.5), but it runs locally.

This is only the start.

On the potential of ChatGPT

If you are interested in where this ChatGPT story is heading product-wise, check out the first half of this TED video: The Inside Story of ChatGPT’s Astonishing Potential.

Greg Brockman is one of the founders of OpenAI. He does an impressive live demo of what it is like to use ChatGPT with plugins.

You can skip the panel - there is nothing particularly valuable there.

Here is another example that highlights the potential of ChatGPT. This WebUI was crafted by a prompt:

There is a blog post that describes the process of creating an app with ChatGPT from scratch: How ChatGPT (GPT-4) created a Social Media Posts Generator Website. You can find the prompts on GitHub.

Answering GPT-related questions

Here are a few new questions and answers:

If you have any other questions, don’t hesitate to leave them in the comments.

Wishing you a great long weekend!