On ChatGPT-4, Mistral 7B OpenChat and OpenAI going vertical

The rate of progress keeps up, and not all of it is locked behind OpenAI.

Here is an executive summary of the most important things that have happened since the last newsletter.

As a reminder: last time we mentioned the multimodal capabilities of ChatGPT and a promising new model called Mistral 7B. Both have seen further developments, covered in this newsletter.

OpenAI releases new GPT versions

The new models and product features from OpenAI are all over the news. They have released GPT-4 Turbo (successor to the best model in the world) and a refreshed GPT-3.5 Turbo (a cheap model that is still best in its class). Here is their announcement.

OpenAI improved both models by making them cheaper, at some cost to quality. Their goal was probably to make the models as cheap as possible without drawing too many complaints.

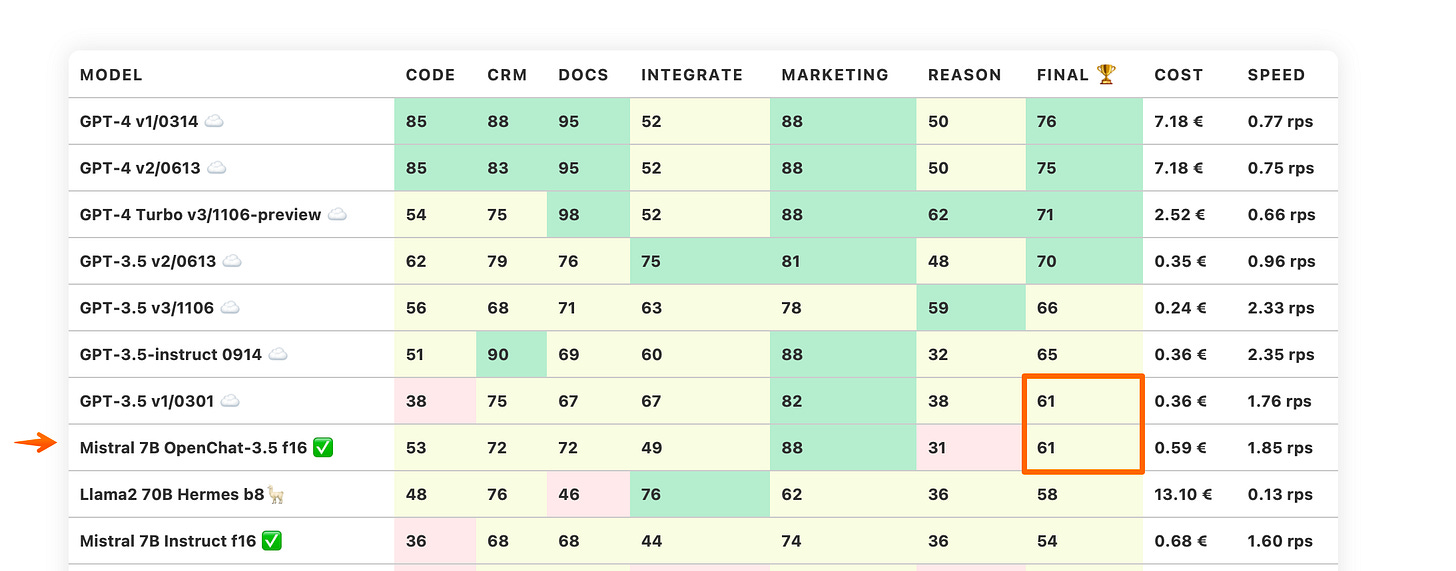

Here are the numbers from the upcoming Trustbit LLM Benchmark for November.

Both models were also refreshed with new data. They are now aware of things that happened in the world up to April 2023. This makes them more useful for working with code, libraries and software.

Surprisingly enough, GPT-4 got significantly better at understanding niche languages and cultures. Previously it was excellent with English and good enough with similar languages like German, Spanish and French. However, if you tried to converse with it in a language spoken by “only” 1M people in the world, you were probably out of luck. Its answers were simply poor.

According to the linguistic communities, GPT-4 Turbo can now speak and understand such small languages much better. I’m working with experts to build a small benchmark to verify and measure these claims.

OpenAI goes vertical

In addition to model refreshes, OpenAI released new capabilities that build on top of their language models:

GPT-4 gets a much bigger context window: it can now hold up to 128k tokens (roughly a large book). This is bigger than the 100k context window of Anthropic's Claude.

GPT-4 gains the ability to work with images, priced by the number of pixels. An image of 768x768 pixels would cost $0.00765 to process.

OpenAI starts blurring the terminology and introduces “GPTs” - apps on top of ChatGPT that come preconfigured with additional data, documents and access to more services. Naturally, there will be a “GPT Store”.

In addition to the apps, there will also be an Assistants API - direct programmatic access to some of these capabilities.
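The image price quoted above can be reproduced with a little arithmetic. The sketch below assumes the tile-based scheme OpenAI documented for high-detail images at launch (fit the image within 2048x2048, shrink the shortest side to 768, then charge 170 tokens per 512-pixel tile plus 85 base tokens) and an input price of $0.01 per 1K tokens. The constants and function names are illustrative assumptions, not something from the announcement itself, and the pricing may have changed since.

```python
import math

# Assumed constants, taken from OpenAI's launch-time documentation.
TOKENS_PER_TILE = 170
BASE_TOKENS = 85
USD_PER_1K_INPUT_TOKENS = 0.01  # assumed GPT-4 Turbo input price


def image_tokens(width: int, height: int) -> int:
    """Estimate the tokens billed for one high-detail image."""
    # Step 1: shrink (never enlarge) to fit inside a 2048x2048 square.
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # Step 2: shrink (never enlarge) so the shortest side is at most 768.
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale
    # Step 3: count the 512x512 tiles needed to cover the image.
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return BASE_TOKENS + TOKENS_PER_TILE * tiles


def image_cost_usd(width: int, height: int) -> float:
    """Convert the token estimate into dollars."""
    return image_tokens(width, height) * USD_PER_1K_INPUT_TOKENS / 1000


if __name__ == "__main__":
    print(image_tokens(768, 768), image_cost_usd(768, 768))
```

Under these assumptions a 768x768 image needs four tiles, so 765 tokens, which matches the $0.00765 figure.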

Long story short, OpenAI is following the AWS playbook. First it got the basic building blocks in place. Now it is building services on top of them to capture the most interesting business cases. Unique access to the technology and to use case data only helps them here.

There are a lot of startups that act as thin wrappers around GPT, like “Chat with your PDF”. Curiously, they aren’t shedding tears over being driven out of business. On the contrary, their margins got 2-3 times better (models got cheaper) and there are more tools for delivering business value to customers.

It is a win-win situation so far. There are too many niche business cases and scenarios for AI that still lack convenient tools, and OpenAI can’t serve them all.
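To make the "2-3 times" figure concrete, here is a rough sketch of the model cost of a single request before and after the price drop. The per-token prices are my recollection of OpenAI's list prices for GPT-4 (8k) and GPT-4 Turbo at the time, and the request size is a made-up example, so treat all the numbers as assumptions.

```python
# USD per 1K tokens; assumed list prices, check the current pricing page.
PRICES = {
    "gpt-4": {"input": 0.03, "output": 0.06},
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Model cost in USD for one request."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000


# Hypothetical "chat with your PDF" request: a long prompt, a short answer.
old = request_cost("gpt-4", input_tokens=3000, output_tokens=500)
new = request_cost("gpt-4-turbo", input_tokens=3000, output_tokens=500)

if __name__ == "__main__":
    print(f"old: ${old:.3f}  new: ${new:.3f}  ratio: {old / new:.1f}x")
```

Input-heavy workloads land closer to the 3x end and output-heavy ones closer to 2x. Note that this only covers the model bill; the effect on an actual margin depends on what else the product costs to run.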

For example, I had to build my own tool just to comb through the submissions to a webinar and pick the ones that would get the most value out of it.

Mistral 7B OpenChat catches up with GPT-3.5

I wrote in my previous newsletter about the new open-source model called Mistral 7B:

“capabilities of Mistral 7B outperform not only 13B models on product benchmarks, it also is competitive with 70B models. All of that, while being much cheaper to run.”

Being open-source is a huge innovation factor these days. Suddenly you get the rest of the world helping you to push the state of the art.

Today we have a new fine-tuned version of Mistral 7B called OpenChat-3.5. Not only does it beat Llama 2 70B Hermes, it also catches up with the first GPT-3.5 model.

Mistral 7B OpenChat is already quite good and cheap to run. For example, given the task of producing search keywords for products in a catalogue, it can process 15-20 products per second on a commodity server with an NVIDIA RTX 3090 GPU.
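For a sense of scale, the snippet below turns that throughput into wall-clock time for a whole catalogue. The catalogue size of one million products is a hypothetical figure chosen for illustration, and the estimate assumes the rate stays constant on a single GPU.

```python
def catalogue_hours(num_products: int, products_per_second: float) -> float:
    """Hours needed to process a catalogue at a steady throughput."""
    return num_products / products_per_second / 3600


if __name__ == "__main__":
    # Hypothetical catalogue of 1M products at both ends of the quoted range.
    for rate in (15, 20):
        print(f"{rate} products/s: {catalogue_hours(1_000_000, rate):.1f} hours")
```

At these rates a catalogue of that size fits into well under a day on one commodity card.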

New ML labs content: Vocabulary for the Busy

As a newsletter subscriber, you get access to new content from ML Product labs. This release includes a brief explanation of the most important terms around LLMs and ChatGPT, written for busy people.

Please ping me if you would like more terms to be added there.