New LLM Benchmarks, Enterprise AI Challenge

Hello, my Dear Reader. Long time no see, so let’s catch up.

Today I would like to talk a bit more about the new flavours of Large Language Models. Then I will move one step higher and talk about LLM-driven systems for business - where is the market heading in the next few quarters? And how can we measure the progress together?

Anthropic Claude 3.0 - Amazing Jump in Quality

First of all, I would like to take a moment and marvel at the huge jump that Anthropic has achieved with its third generation of Large Language Models, especially Opus and Haiku.

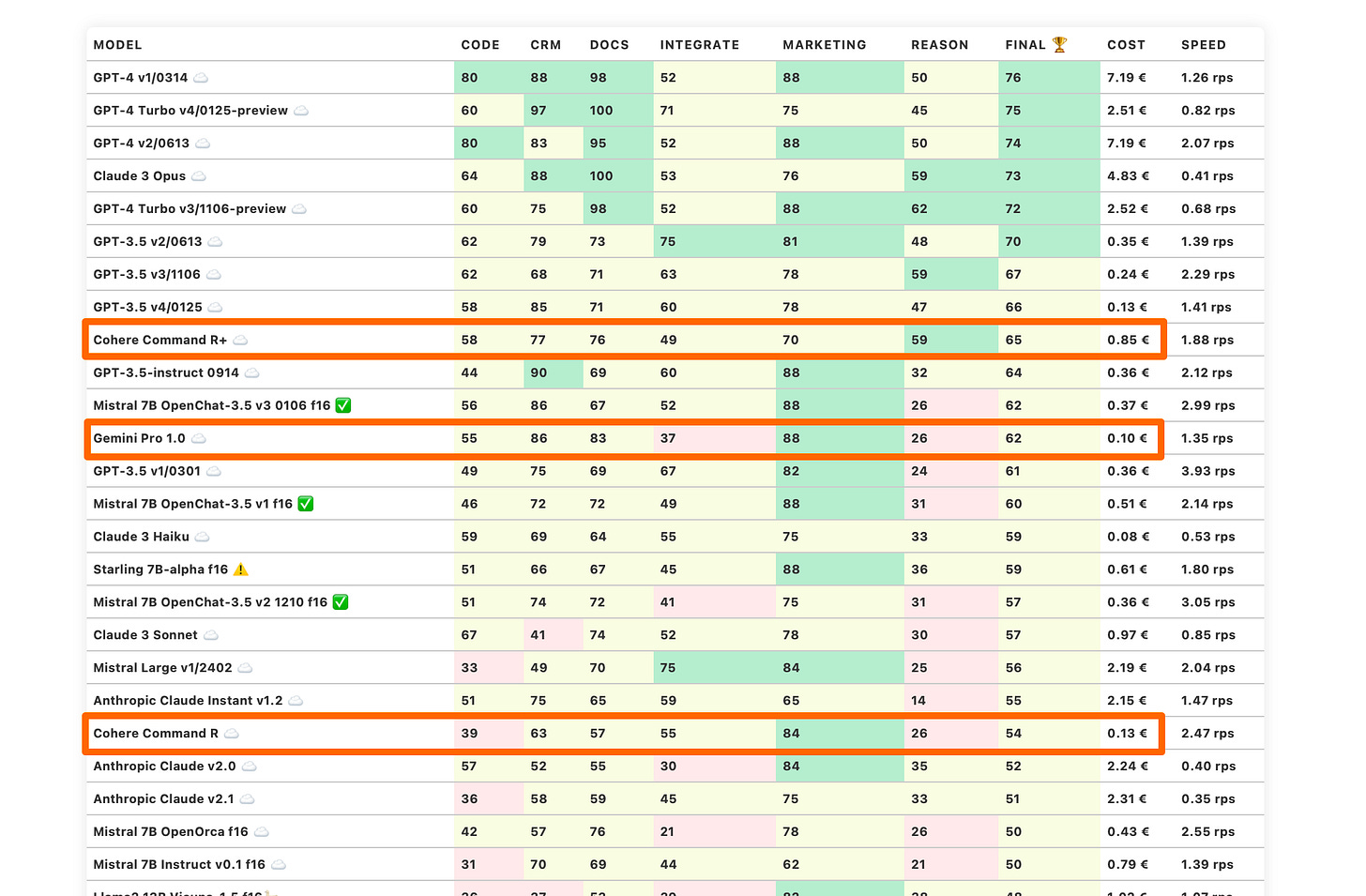

Claude 3 Opus is the first LLM that has managed to beat one of the GPT-4 versions on Trustbit LLM Benchmarks. It is also the first model to achieve a perfect score of 100 in the “Docs” category.

The Docs category covers LLM tasks and capabilities related to working with documents and playing nicely with Retrieval-Augmented Generation (RAG) tasks.

Claude 3 Haiku didn’t go as high as Claude 3 Opus, but it achieves an outstanding price/performance ratio. This tiny model reaches the same class as Gemini Pro 1.0.

A lot of practitioners building LLM-driven systems swear by the Claude 3 models and claim that they are better than GPT-4 on their tasks.

Other notable LLMs

There are a few other notable LLM releases that are interesting:

They don’t break any records, but they put pressure on GPT-3.5 by beating the older models.

What about Grok and Databricks LLMs?

Grok is the LLM from xAI (x.ai), the AI company associated with Twitter, and DBRX is a Databricks LLM from the Mosaic Research Team. Both large language models are downloadable and claim to push the state of the art.

Both are just PR stunts that nobody will really use.

Why?

Their reported quality is mediocre and the cost of running them is huge (they have 314B and 132B parameters, respectively). These are not the kind of LLMs that the community will embrace and push forward, as happened with the Llama and Mistral models.
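To put the parameter counts above into perspective, here is a back-of-the-envelope sketch of how much memory is needed just to hold the weights. It assumes 16-bit weights (2 bytes per parameter), which is my assumption and not something either vendor prescribes, and it ignores the KV cache, activations and runtime overhead, so real requirements would be higher. The helper name `weights_gb` is purely illustrative.

```python
# Rough lower bound on the memory needed just to hold model weights.
# Assumption: 16-bit weights (2 bytes per parameter). KV cache, activations
# and runtime overhead are ignored, so real requirements are higher.

BYTES_PER_PARAM_16BIT = 2


def weights_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM_16BIT) -> float:
    """Return the weight footprint in gigabytes (1 GB = 10**9 bytes)."""
    return params_billions * 1e9 * bytes_per_param / 1e9


for name, size in [("Grok (314B)", 314), ("DBRX (132B)", 132)]:
    print(f"{name}: ~{weights_gb(size):.0f} GB of weights at 16-bit precision")
```

Under these assumptions the weights alone come to roughly 628 GB and 264 GB. Quantization would shrink these numbers, but either way this is well beyond a single consumer GPU.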

New functional capabilities of LLMs

Some of the new LLMs have new capabilities that this benchmark can’t even measure properly. For example:

Cohere Command-R models are designed to work with multiple languages, integrate well with document retrieval and use tools. They have a context window of 128k tokens.

Claude 3 models have an even larger context window of 200k tokens, are multilingual and have vision capabilities. They are optimized for enterprise use-cases and large-scale deployments.

These new models reinforce the general trend:

Models get larger contexts over time - they are designed to ingest a lot of data.

New LLM capabilities show up and become standard - reading charts, analysing graphs and schematics, using additional tools.

New models are designed to be used as parts of the systems, not as standalone chat bots.

I think this trend of building integrated language models will just continue, both for the cloud-hosted systems and for the locally-deployable models.

Classic LLM benchmarks are good, but they alone are not enough to measure future progress accurately and practically.

We need a new format that will help to evaluate performance of AI systems as a whole, not just the standalone LLMs.

Here comes the Enterprise AI Challenge

The idea is to run a friendly competition among practitioners and vendors of LLM-driven systems.

The first rounds of this competition will focus on the fundamental problem: let’s evaluate an AI system that can ingest a bunch of documents and answer predefined questions about them. In other words, RAGs.

Participants (more than 20 teams have already shown their interest) will need to set up a system that can answer questions based on the public annual reports of companies.

The types of questions will be known in advance. For example, “What was the liquidity of COMPANY_NAME at the end of YEAR?”

The only unknown part will be the specific questions and specific documents - these will be derived publicly from a third-party source of entropy, using an auditable process.

(Why bother with an “auditable process” and a “third-party source of entropy”? Because I would like to participate in the fun as well :))
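To make the idea of an auditable draw more concrete, here is a minimal sketch of one way it could work. This is my illustration, not the actual process of the challenge: the seed string, the company names and the helper names `pick` and `draw_questions` are all hypothetical. The point is that once a public seed is known, anyone can re-run the same code and get exactly the same questions.

```python
import hashlib


def pick(seed: str, label: str, options):
    """Pick one option deterministically from a public seed and a label."""
    digest = hashlib.sha256(f"{seed}:{label}".encode("utf-8")).digest()
    # The slight modulo bias is acceptable for a sketch.
    return options[int.from_bytes(digest, "big") % len(options)]


def draw_questions(seed: str, templates, companies, years, count: int):
    """Derive `count` questions from the seed; same seed, same questions."""
    questions = []
    for i in range(count):
        template = pick(seed, f"{i}:template", templates)
        company = pick(seed, f"{i}:company", companies)
        year = pick(seed, f"{i}:year", years)
        questions.append(template.format(company=company, year=year))
    return questions


# Hypothetical inputs: in a real run the seed would come from a third-party
# source that nobody involved controls, published only after sign-up closes.
TEMPLATES = ["What was the liquidity of {company} at the end of {year}?"]
COMPANIES = ["Company A", "Company B", "Company C"]
YEARS = [2021, 2022, 2023]

for question in draw_questions("public-seed-goes-here", TEMPLATES, COMPANIES, YEARS, 3):
    print(question)
```

Deriving every pick directly from a SHA-256 hash, instead of a library random number generator, keeps the result independent of any particular runtime version, which makes it easier for others to verify.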

The challenge might look simple enough. After all, the same capability is already offered by a large number of vendors and software providers, from OpenAI Assistants to LlamaIndex and LangChain.

However, the devil is in the details - I expect there will be a significant variation in quality and stability of the answers between the systems. I hope that we’ll eventually be able to correlate these variations with the different underlying architectures of LLM-driven systems. This could ultimately help to build a better shared understanding and push the state of the art further.

Sounds interesting? Stay tuned.